Before we talk about how AI Quality Gates are reshaping the translation industry, imagine this situation.

You deliver a translation you’re confident in. Terminology is consistent, tone is right, meaning is accurate. Then, instead of feedback from a reviewer, you receive an automated notice:

“Your translation scored 78.4% on our AI Quality Gate. Revision required.”

No explanation. No human comments. Just a number — and an unspoken warning that your future assignments may depend on it.

This is no longer hypothetical. Large LSPs and enterprise localization teams are already using AI Quality Gates internally. By 2026, they will be standard across high-volume translation workflows.

If MTPE changed how translators work, AI Quality Gates will change how translators are judged. And that shift is far more disruptive.

What are AI Quality Gates in translation?

AI Quality Gates are automated evaluation systems that assess translation quality before a human reviewer ever sees the content. They generate a score based on multiple criteria, including:

- accuracy and meaning preservation

- fluency and grammatical correctness

- terminology consistency

- style and register adherence

- severity of detected errors

- overall risk level

If the translation meets the predefined threshold (often set somewhere between 85% and 90%), it passes. If it doesn’t, the text is flagged, sent back for revision, or rejected.
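As a rough illustration of how per-criterion scores could roll up into a single gate decision, here is a minimal Python sketch. The criterion names, the weights, and the 85-point threshold are assumptions chosen for the example, not the settings of any real system.

```python
# Hypothetical weights in percentage points (they sum to 100).
# A real gate would define its own criteria and weighting.
WEIGHTS = {
    "accuracy": 40,
    "fluency": 20,
    "terminology": 20,
    "style": 20,
}


def gate_score(criterion_scores: dict) -> float:
    """Weighted average of per-criterion scores, each on a 0-100 scale."""
    weighted_sum = sum(WEIGHTS[name] * criterion_scores[name] for name in WEIGHTS)
    return weighted_sum / sum(WEIGHTS.values())


def passes_gate(criterion_scores: dict, threshold: float = 85.0) -> bool:
    """True when the combined score meets the (assumed) threshold."""
    return gate_score(criterion_scores) >= threshold


scores = {"accuracy": 90, "fluency": 80, "terminology": 70, "style": 60}
print(gate_score(scores))   # 78.0
print(passes_gate(scores))  # False
```

In this sketch, a translation with strong accuracy still fails because the weaker terminology and style scores pull the average below the threshold.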

Think of AI Quality Gates as airport security for language: nothing moves forward unless it clears the scan.

Why AI Quality Gates are spreading so fast

LSPs are adopting AI Quality Gates for three structural reasons.

1. Cost pressure

Human QA is expensive and slow. AI scoring scales instantly at a fraction of the cost.

2. Volume and speed

Enterprise localization involves millions of words per month. AI can evaluate everything. Humans cannot.

3. Consistency

AI applies the same rules every time. Human reviewers vary in strictness, focus, and fatigue.

This evolution aligns with broader trends already discussed in NovaLexy’s analysis of AI translation jobs in 2026, where evaluation — not translation — becomes the bottleneck:

AI Translation Jobs in 2026: Who Wins, Who Loses, Who Adapts

Why AI Quality Gates matter more than MTPE

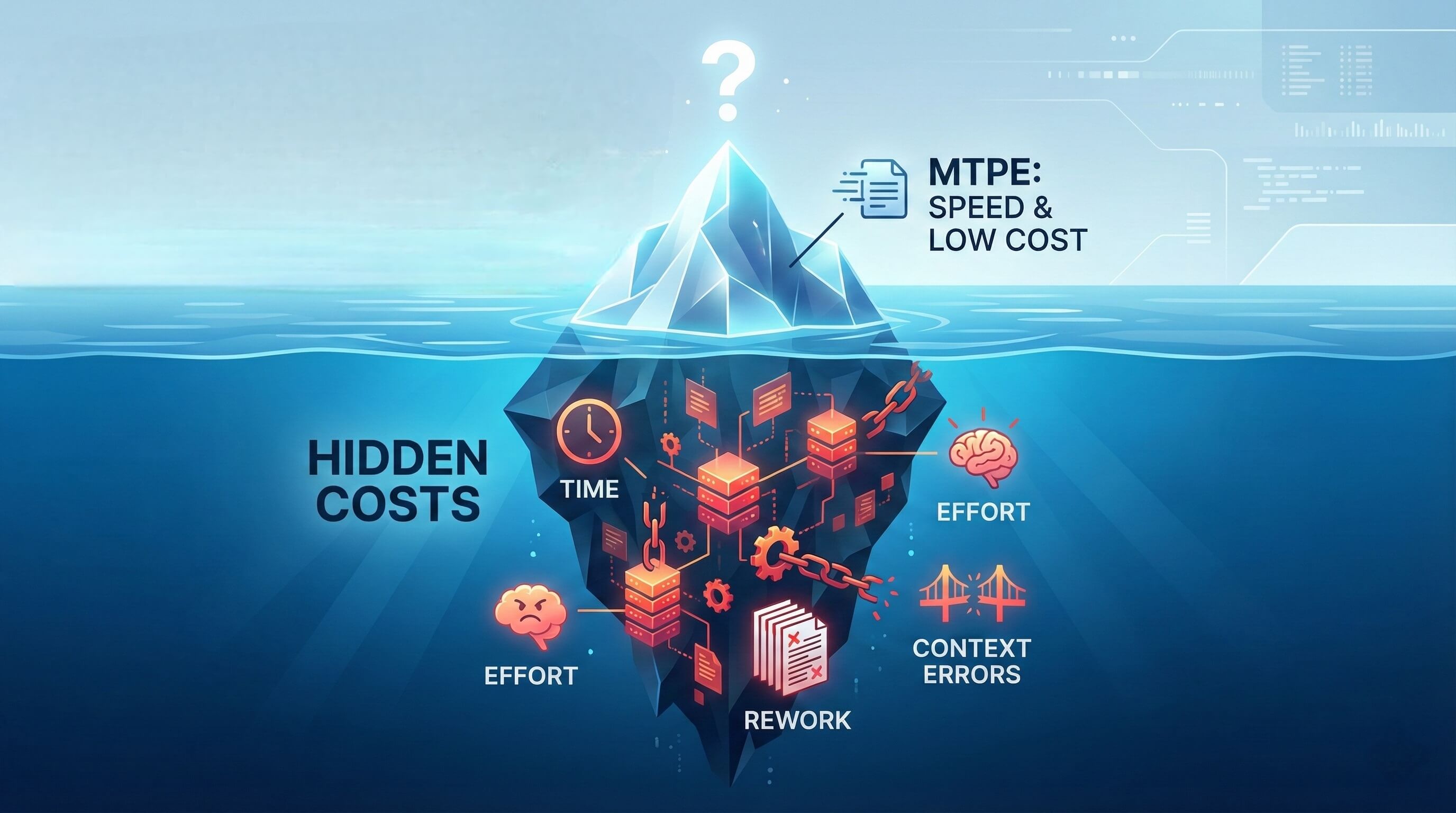

MTPE changed how translators work.

AI Quality Gates change whether translators get work.

Because now:

- your output is scored automatically

- your score influences assignment allocation

- your rate may depend on algorithmic thresholds

- your reputation becomes a metric

This is a shift in power. Translators are no longer judged primarily by humans, but by systems trained on statistical expectations.

This concern connects directly to the question raised in “Will AI Replace Translators?” Replacement isn’t the only risk. Automated judgment is another.

How AI Quality Gates actually score translations

Most AI Quality Gate systems rely on a combination of techniques.

1. Quality Estimation models

These models predict translation quality without comparing against a human reference.

2. AI reference comparison

Your translation is compared against an AI-generated “ideal” output.

3. Error classification

Errors are categorized by type: accuracy, terminology, grammar, or style.

4. Severity weighting

Not all errors are equal. Critical meaning shifts are penalized more heavily.

5. Threshold logic

Scores determine outcomes. The exact cut-offs vary from system to system, but an illustrative scheme looks like this:

- <85% → fail

- 85–90% → revision required

- >90% → pass

The result is a system where translators must optimize for both human readers and algorithms.
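The last three steps (error classification, severity weighting, and threshold logic) can be sketched in a few lines of Python. This is an assumption-laden illustration loosely in the spirit of error-typology scoring, not the method of any specific vendor: the penalty points per severity level and the "points per 100 words" normalization are hypothetical, and only the three outcome bands come from the list above.

```python
from dataclasses import dataclass

# Hypothetical penalty points per severity level (illustrative only).
SEVERITY_PENALTY = {"minor": 1, "major": 5, "critical": 25}


@dataclass
class Error:
    category: str  # "accuracy", "terminology", "grammar", or "style"
    severity: str  # one of the keys in SEVERITY_PENALTY


def quality_score(errors, word_count: int) -> float:
    """100 minus penalty points per 100 words, floored at 0."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    penalty = sum(SEVERITY_PENALTY[e.severity] for e in errors)
    return max(0.0, 100.0 - 100.0 * penalty / word_count)


def gate_outcome(score: float) -> str:
    """Map a score to the three bands: <85 fail, 85-90 revision, >90 pass."""
    if score < 85:
        return "fail"
    if score <= 90:
        return "revision required"
    return "pass"


# Five minor style slips in a 200-word text barely move the score...
minor_only = [Error("style", "minor")] * 5
print(quality_score(minor_only, 200), gate_outcome(quality_score(minor_only, 200)))
# 97.5 pass

# ...while a single critical accuracy error triggers a revision.
one_critical = [Error("accuracy", "critical")]
print(quality_score(one_critical, 200), gate_outcome(quality_score(one_critical, 200)))
# 87.5 revision required
```

The point of the weighting is visible in the two examples: several small errors cost less than one critical meaning shift.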

The hidden risks most translators underestimate

Creativity penalties

Expressive phrasing may score lower than literal output if it diverges from the AI’s expectation.

Domain misunderstanding

Legal, medical, and marketing nuance is often misinterpreted by generic models.

Bias toward machine-like language

Safe, predictable phrasing frequently scores higher than natural human variation.

Regional variation issues

UK vs. US English, Brazilian vs. European Portuguese, or LATAM vs. Castilian Spanish can trigger false penalties.

Paradoxical MTPE punishment

Heavily improving raw MT output can itself be flagged, because the edited text deviates from what the system expects.

How AI Quality Gates will affect translator rates

This is the uncomfortable reality:

AI scores will be used to justify rate stratification.

- 95%+ → premium tier

- 85% to just under 95% → standard tier

- <85% → heavy post-editing category

This mirrors the economic shift already seen with MTPE, discussed in AI Translation and the Hidden Cost of Automation.
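The tier mapping described above is simple enough to write down directly. The following Python sketch is only an illustration; it assumes that every score from 85 up to, but not including, 95 falls in the standard tier, and the function name is hypothetical.

```python
def rate_tier(score: float) -> str:
    """Map a 0-100 quality score to one of the three rate tiers."""
    if score >= 95:
        return "premium"
    if score >= 85:
        return "standard"
    return "heavy post-editing"


for s in (97.0, 94.9, 85.0, 78.4):
    print(s, rate_tier(s))
# 97.0 premium
# 94.9 standard
# 85.0 standard
# 78.4 heavy post-editing
```

Note how little separates the tiers: under these assumptions, a tenth of a point at the 95 boundary is the difference between premium and standard.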

Which translators will thrive in this system

- terminology-driven professionals

- deep domain specialists

- translators who understand AI evaluation logic

- those with direct client relationships

- hybrid linguists who combine AI efficiency with human judgment

How to adapt before 2026

- learn how AI evaluates quality

- prioritize terminology consistency

- apply creativity strategically, not everywhere

- specialize in high-risk domains

- pre-check your work before automated QA

Platforms like NovaLexy Playground help translators simulate real evaluation scenarios and understand how quality is assessed — before a client’s system does.

The future: AI Quality Gates with human accountability

AI Quality Gates won’t eliminate translators.

They will eliminate inconsistency.

The translators who succeed will be those who understand how machines evaluate language — and who know when to override them with human judgment.

Frequently Asked Questions

AI Quality Gates are automated checks that score a translation before it moves forward in a workflow. They can evaluate accuracy, fluency, terminology, consistency, and style adherence. If the score is below a threshold, the text gets flagged for revision or rejection.

AI Quality Gates can affect translator rates. Many companies will use AI scores to label translators as “premium” or “standard,” and then justify different pay levels. The risk is that a single score can influence rates even when the translation is acceptable to humans. Translators who maintain high consistency and low error rates are usually better placed to protect their position.

To improve your score, focus on terminology consistency, avoid unnecessary rewrites, keep numbers and meaning tightly aligned with the source, and follow the client’s style rules exactly. The goal is not to sound like a machine, but to remove the kinds of variations that automated systems often mistake for errors.
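Keeping numbers aligned with the source is one of the easier things to verify mechanically. The Python sketch below is a hypothetical helper, not part of any real QA tool. It assumes that stripping thousands and decimal separators is an acceptable way to compare numbers across locales, which means it cannot tell 1.5 from 15, and it ignores numbers written out as words.

```python
import re
from collections import Counter

# Digits, optionally grouped with "." or "," (e.g. 1,500.50 or 1.500,50).
NUMBER_PATTERN = re.compile(r"\d+(?:[.,]\d+)*")


def extract_numbers(text: str) -> Counter:
    """Count number tokens with separators removed, so that
    locale-specific formatting does not cause false alarms."""
    tokens = NUMBER_PATTERN.findall(text)
    return Counter(re.sub(r"[.,]", "", token) for token in tokens)


def number_mismatches(source: str, target: str):
    """Return (missing_in_target, unexpected_in_target) as sorted lists."""
    src, tgt = extract_numbers(source), extract_numbers(target)
    missing = sorted((src - tgt).elements())
    unexpected = sorted((tgt - src).elements())
    return missing, unexpected


# Same amounts, different locale formatting: no mismatch reported.
print(number_mismatches("Pay 1,500.50 by 2026.", "Zahlen Sie 1.500,50 bis 2026."))
# ([], [])

# A dosage typo in the target is caught.
print(number_mismatches(
    "Take 20 mg twice daily for 14 days.",
    "Tomar 200 mg dos veces al día durante 14 días.",
))
# (['20'], ['200'])
```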