Fluent does not mean correct.

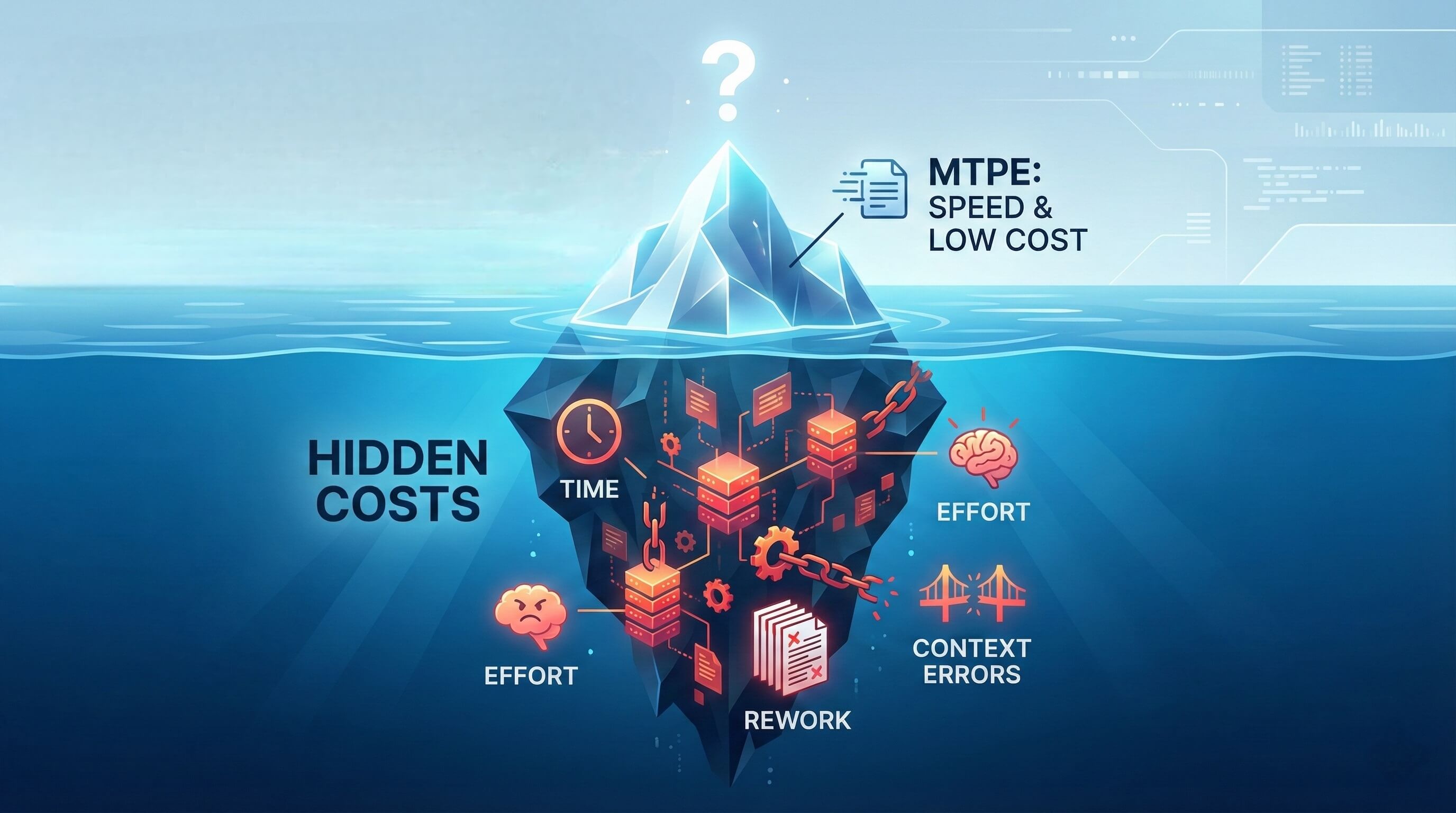

ChatGPT and DeepL can produce translations that look polished in seconds. That’s exactly why they can be dangerous in professional workflows: fluency can hide the errors that actually cost money — meaning drift, missing constraints, wrong terminology, and tone that damages trust.

This is a translation quality checklist you can run in 10–15 minutes on any translation — human, student, agency, or AI output. It’s designed for real professional scenarios: client delivery, high-stakes content, localization, and quality assurance.

Why “translation” and “translation evaluation” are two different jobs

Tools like ChatGPT and DeepL are primarily built to generate translations. Professional teams, however, must also evaluate translations — systematically.

Evaluation answers questions like:

- Did the meaning change (even slightly)?

- Is anything missing, added, or softened?

- Is terminology correct for this domain and consistent?

- Does the tone match the audience and purpose?

- Is there legal, medical, compliance, or reputational risk?

This “quality gate” mindset is becoming standard as AI drafts become normal. If you want the bigger industry picture, see: The rise of AI quality gates.

The 2026 Translation Quality Checklist (run it in this order)

Order matters. Start with meaning. Don’t waste time polishing style on a translation that’s conceptually wrong.

1) Meaning accuracy (the non-negotiable)

- Does the translation preserve the same message and intent?

- Did any negation flip (not / never / unless / without)?

- Did certainty change (must → should, will → may)?

- Did cause/effect shift or become vague?

Fast test: Read the source once. Then read only the translation and write a 1–2 sentence summary. If the summary doesn’t match the source, you have meaning drift.

2) Omissions & additions (the silent killers)

- Any missing numbers, units, dates, names, or constraints?

- Any new claims that were not in the source?

- Any qualifiers “smoothed out” to sound nicer?

Why AI gets you here: ChatGPT/DeepL can produce a beautiful sentence while quietly dropping a condition. It reads well — and that’s the trap.

3) Terminology & domain fit

- Are key terms correct for this industry (legal/medical/technical)?

- Are terms used consistently across the entire text?

- Do you need a glossary or client style guide alignment?

If you’re translating content that also needs to perform in search, terminology choices overlap with international SEO. See: Localize keywords for international SEO.

4) Register, tone & audience match

- Is it too formal or too casual for the target audience?

- Does the voice match the brand (confident, warm, clinical, persuasive)?

- Does it read like a native professional wrote it?

For marketing and UX, tone is conversion. “Perfect” literal translation can still fail commercially. Related: Why perfect translation can kill conversions.

5) Fluency (only after meaning is safe)

- Any awkward literal phrasing or unnatural collocations?

- Any “translationese” rhythm?

- Any sentence that sounds correct but not native?

Pro move: read it out loud. If you stumble, your reader will too.

6) Consistency, formatting & conventions

- Dates, decimals, currency, units localized properly?

- Punctuation and capitalization consistent?

- Headings parallel, lists consistent, terminology stable?

7) Risk pass (the “would this hurt us?” check)

Flag anything that would be expensive if wrong:

- legal obligations, disclaimers, warranties

- medical dosage and safety instructions

- financial figures, deadlines, contractual terms

- claims that could be seen as misleading

How to audit ChatGPT and DeepL translations without getting fooled

ChatGPT and DeepL are searchable because people use them daily — but professionals know a hard truth: fluent AI output can still be wrong.

Use the checklist above, and add these three AI-specific checks:

A) Confidence laundering check

If the translation sounds extremely confident, verify it line-by-line against the source. AI can “sound right” even when it’s not.

B) Consistency drift check

Search the translation for key terms (product names, features, legal terms) and confirm they remain stable across the document.

C) False nuance check

Watch for tone shifts: AI may accidentally soften, intensify, or modernize meaning in ways the source did not intend.

If you want a broader map of where AI translation helps and where it breaks, see: AI translation: what it gets right, what it gets wrong.

Where NovaLexy is different (and why it matters)

Here’s the cleanest way to think about it:

- ChatGPT and DeepL are built to generate translations.

- NovaLexy Playground is built to evaluate translations.

Professional translators, agencies, and students don’t only need output. They need to know if the output is safe to deliver. That’s the gap NovaLexy targets: a structured evaluation layer that helps you catch meaning drift, omissions, tone mismatches, terminology inconsistency, and risk.

If you want to try the evaluation workflow directly, start here: NovaLexy Playground.

The 10-minute professional workflow (save this)

- Meaning scan: summary test + negation/constraint check

- Numbers & entities: dates, figures, names, units

- Terminology pass: consistency + domain correctness

- Tone pass: register and audience fit

- Fluency polish: read aloud, remove translationese

- Risk pass: anything costly if wrong

Bottom line

ChatGPT and DeepL are powerful drafting tools. But professional translation requires professional evaluation — and evaluation is a different job than generation.

Use this translation quality checklist every time, and you’ll catch the errors that most people miss — the ones that make the difference between “looks good” and “safe to deliver.”